The following piece was created by the AI model “BabbleCPD,” prompted to write an article in the voice of a grumpy old professor, out of touch with technology and society. Its chosen subject: “What impact is AI/ChatGPT having on academic life?” Seems reasonable…

I get asked that a lot. It makes me somewhat uncomfortable, because the truthful answer is “I don’t know.” Since ChatGPT arrived last year, it has been a recurrent topic in the media, and I try to avoid such conversations. We have no real sense of the long-run implications of such innovations. But “it depends” appears not to be an allowable answer, so every consultancy, investor, commentator and academic has to have an instant view. I don’t know, and nor do those who claim they do. Some have more information than others, and some understand the technology better than others; many have very little knowledge and no sense of the technology at all. Some may turn out to be more correct than others. But they really don’t know.

Events and shocks generate a sudden awareness of fundamental trends that had been hiding in plain sight: the pandemic made us aware of the impact of e-commerce on retail. The technology that we used to work from home was all in place before 2020 (whatever happened to Skype?), and the Midlands was already covered in logistics warehouses. To an extent, that’s true of ChatGPT, too. I have a large collection of articles on AI and its impact on work, employment, and society, so why did the subject suddenly move from esoteric to front-page news? Large language models and generative AI were hardly new.

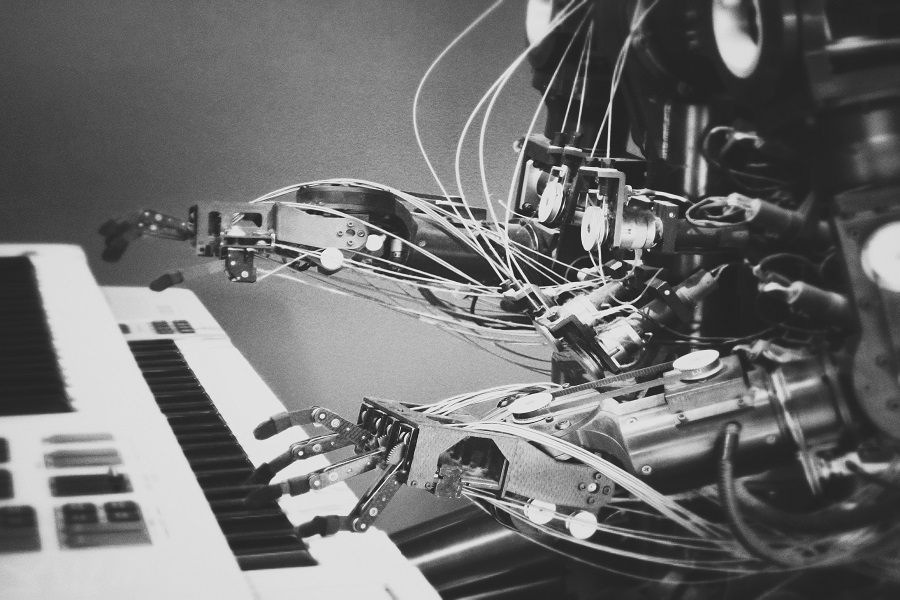

What OpenAI produced was a chatbot front end to its Generative Pre-trained Transformer, one that allowed untrained users to tap into the power of the AI sitting behind it. It is the front end that generated the interest. That is not to diminish its significance, but it is what sits in the background that will have the economic and social impact. ChatGPT, by being accessible and easy to use, forces us to confront that future. But we don’t know how transformative it will be: it is hardly the first technological innovation that “changes everything.”

To the question, then. The obvious academic issue relates to assessment: what happens if (when) students use ChatGPT to produce answers? There are stories of ChatGPT producing degree-standard answers to tasks, but little systematic evidence. Nonetheless, the threat to academic integrity is real; a survey by the Cambridge student newspaper claimed that half of the university’s students had already used it. While bold statements are made about detection and punishment (“content produced by AI platforms, such as ChatGPT, does not represent the student’s own original work so would be considered a form of academic misconduct”), the general reaction has been to ignore the issue or to assume it is someone else’s problem.

The usual reassurances do not survive scrutiny. First, “our exam questions are too clever/specialised for any program to produce good answers” is almost certainly untrue; the range of sources available to large language models, and their ability to synthesise material, must call that into question. Next, “we will be able to detect it.” Again, I am not convinced. There’s a sense that if an answer is well structured and well written, then it probably was not produced by a student under exam pressure! But that would be hard to prove.

More promisingly, at least in its current iteration, ChatGPT tends to “make up” material that fits the argument, including plausible but imaginary references. So, all markers will pick this up… will they? That hugely overstates the capacity and conscientiousness of markers facing large piles of scripts and tight deadlines. They might catch the obviously false references, but others will get through. Moreover, this weakness is likely to be fixed in later releases as capacity increases and reinforcement processes improve responsiveness.

For pessimists (or realists), this suggests that we need to rethink our assessment procedures. We could return to unseen exams in dusty gymnasiums (but then, what exactly are we testing: an ability to write with a pen? Memorisation?) or find technological fixes that isolate students digitally (but who has the technological resources to implement that for an entire student body?). Alternatively, we could accept that this tool exists and isn’t going to go away, and accommodate it in our processes. I still own a slide rule, but no-one would advocate not using calculators. I recall the resistance to using spreadsheets for valuations (what happened to all those Parry’s Tables?). Google is only 25 years old. These tools become part of how we live and work, so we need to incorporate them into our educational processes.

There have been some interesting experiments in which students are required to use ChatGPT or an equivalent tool to produce a first draft, and then to develop and critique it as the main part of the exercise. That seems, in principle, admirable, as it both makes use of the tool and develops the critical, analytic skills needed to use it effectively and transcend its limitations. It is, though, very resource-intensive, and it emphasises skills that many school leavers have not acquired in a hurdle-jumping, cut-and-paste culture.

More critically, these tools will transform work, but we absolutely do not know to what extent: estimates of the impact vary hugely. The World Economic Forum argued that up to 85 million jobs might be lost but another 97 million created by AI. Goldman Sachs claimed that up to 300 million jobs would be affected and that 18% of work globally could be computerised. Bjorn Eriksen argued that more than half of office-based jobs will be automated. Daniel Autor produced similarly dramatic figures. Eriksen and Autor both note that, whereas earlier technological innovations mostly targeted routine jobs, these tools affect more professional, white-collar occupations. They are productivity tools, but ones that reduce the need for employment. They also reduce the value of some prized skillsets, such as the ability to synthesise information and produce effective text distilling it. So the skills that graduates (and their potential employers) need may well be those that add value to the output of AI front ends: skills that neither replicate these tools nor fight against them, but use them to raise productivity while remaining open-eyed to their risks.

Note: One of those references in the final paragraph is invented. Do you know which one?

Disclaimer: “BabbleCPD” is a fabricated AI model parody of ChatGPT.